Course overview

Aim of this course

- This course provides an introduction to how modern deep learning systems work.

- You will learn the underlying concepts of these systems, such as automatic differentiation, neural network architectures, optimization, and efficient operations on hardware such as GPUs.

- To solidify your understanding, you will build the basic architectures and models from scratch in your homework, such as a fully connected model or a CNN for image classification.

Why Study Deep Learning

Here are some important breakthroughs that use deep learning.

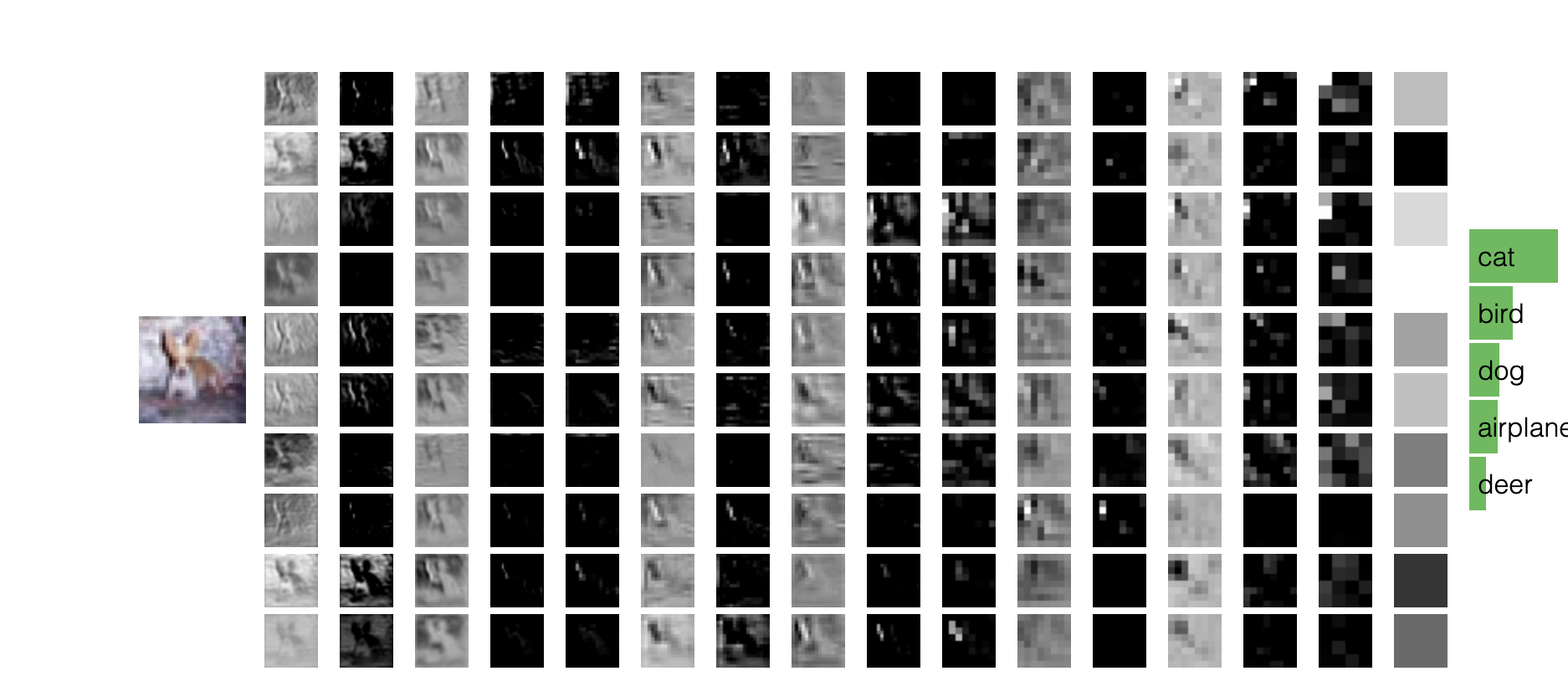

- Image Classification

AlexNet Paper (2012) by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton

Figure 1: Visualization of AlphaGo Process

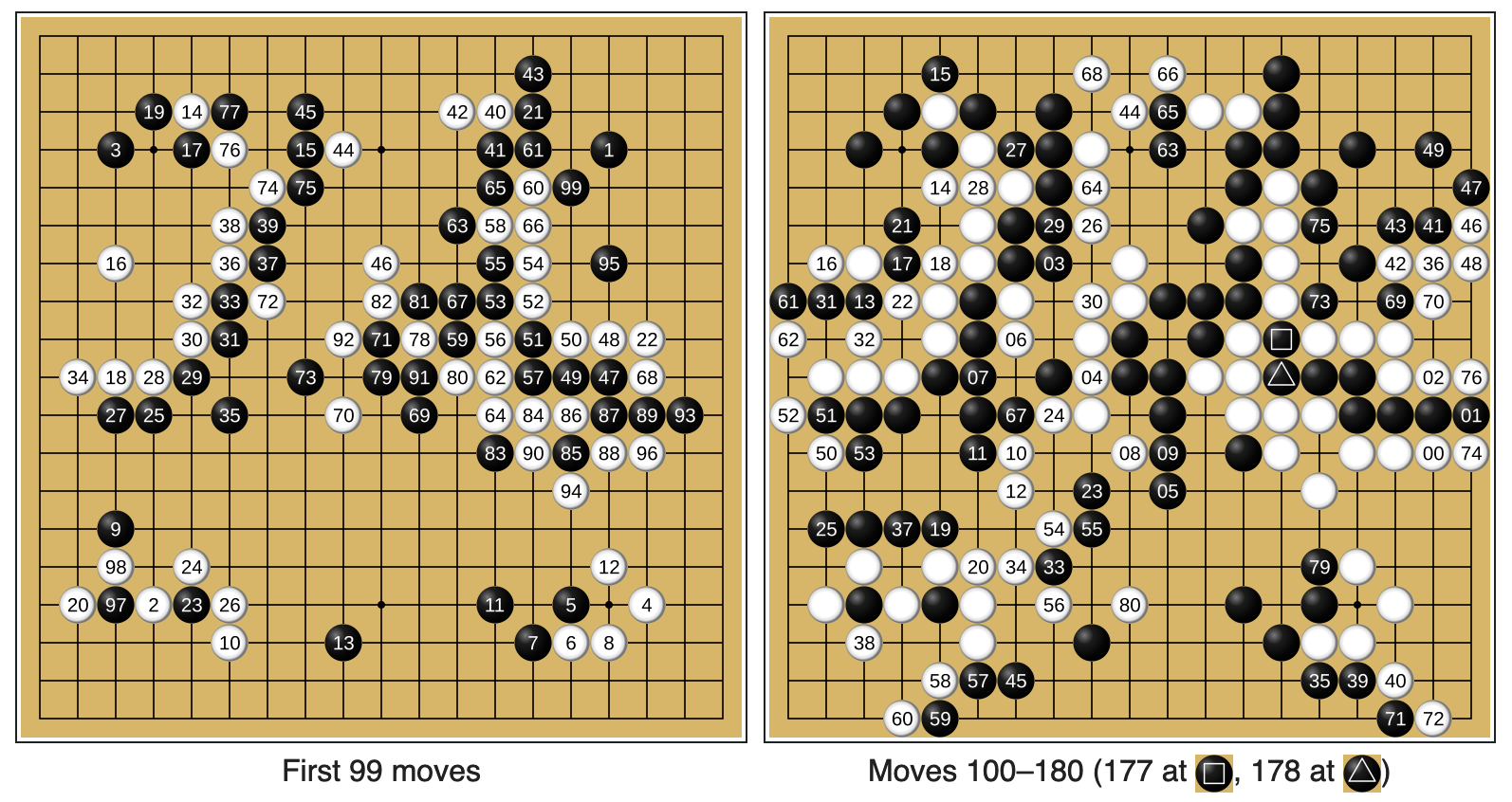

AlphaGo Paper (2016) by David Silver, Aja Huang, Chris J. Maddison, et al.

Figure 1: Visualization of Diffusion Model Process

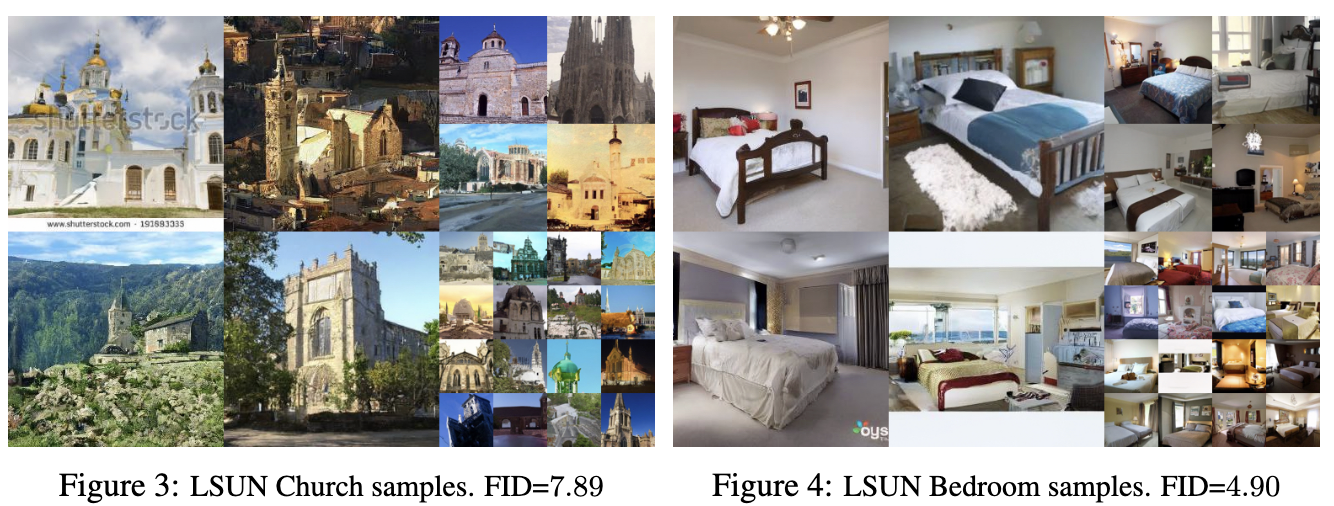

Diffusion Models Paper (2020) by Jonathan Ho, Ajay Jain, Pieter Abbeel

Deep learning has transformed artificial intelligence by enabling machines to learn from large amounts of data, in some tasks matching or surpassing human performance. It underpins progress on challenging problems in natural language processing, computer vision, healthcare, and scientific research, and deep learning models are the backbone of many applications that are changing industries and raising productivity. Understanding deep learning is therefore valuable for anyone who wants to contribute to future technology, from automation and personalized services to intelligent systems that adapt and improve over time.

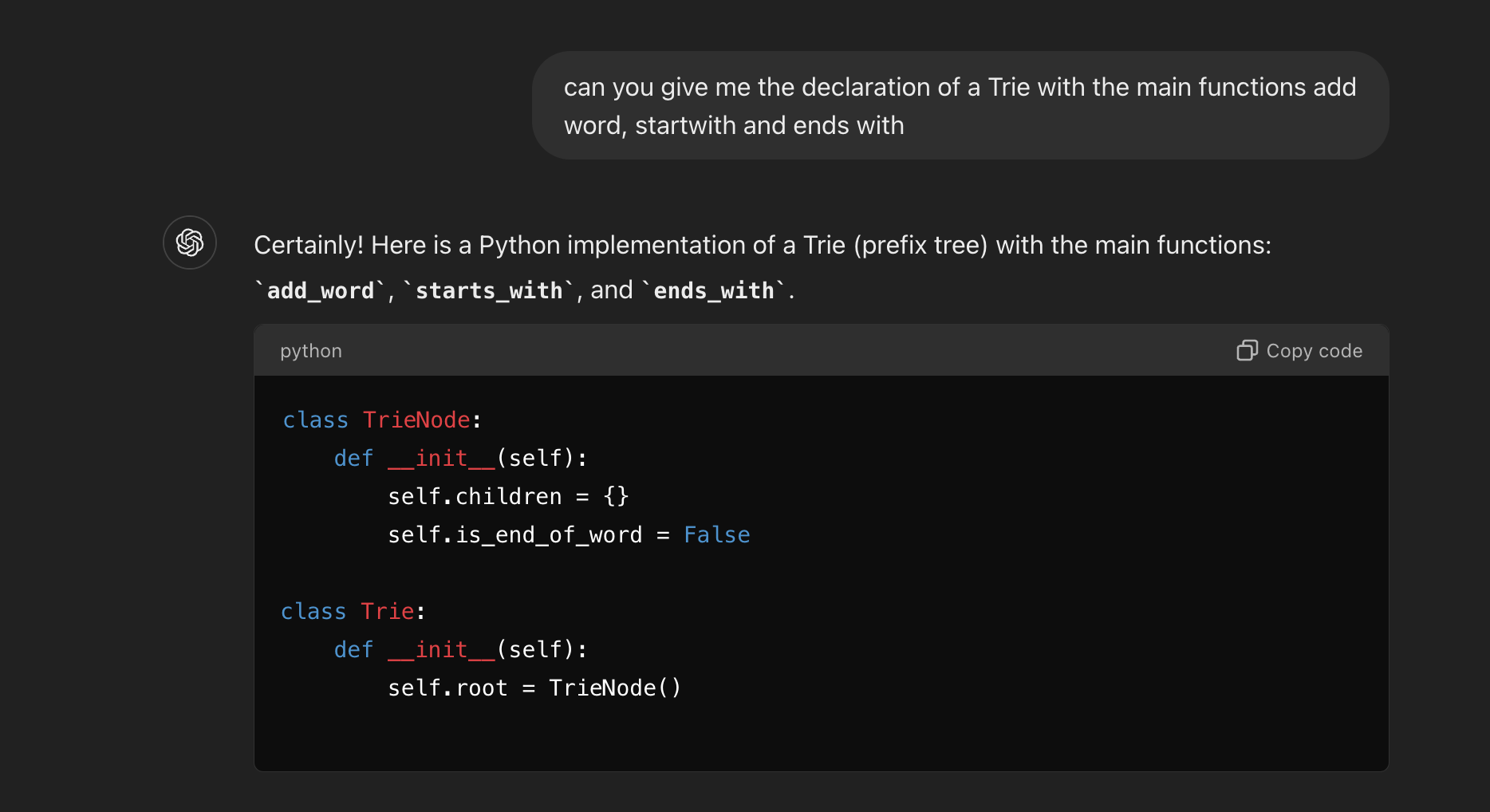

- ChatGPT (2022)

Figure 1: ChatGPT – Revolutionizing Natural Language Processing

GPT-3 Paper (2020) by Tom B. Brown, Benjamin Mann, Nick Ryder, et al. (ChatGPT itself was released in 2022.)

ChatGPT, built on OpenAI’s GPT-3.5 family of models, has changed how machines understand and generate human language. Based on deep learning techniques, it can perform a wide range of tasks, from writing essays to answering complex questions, making it a powerful tool in natural language processing.

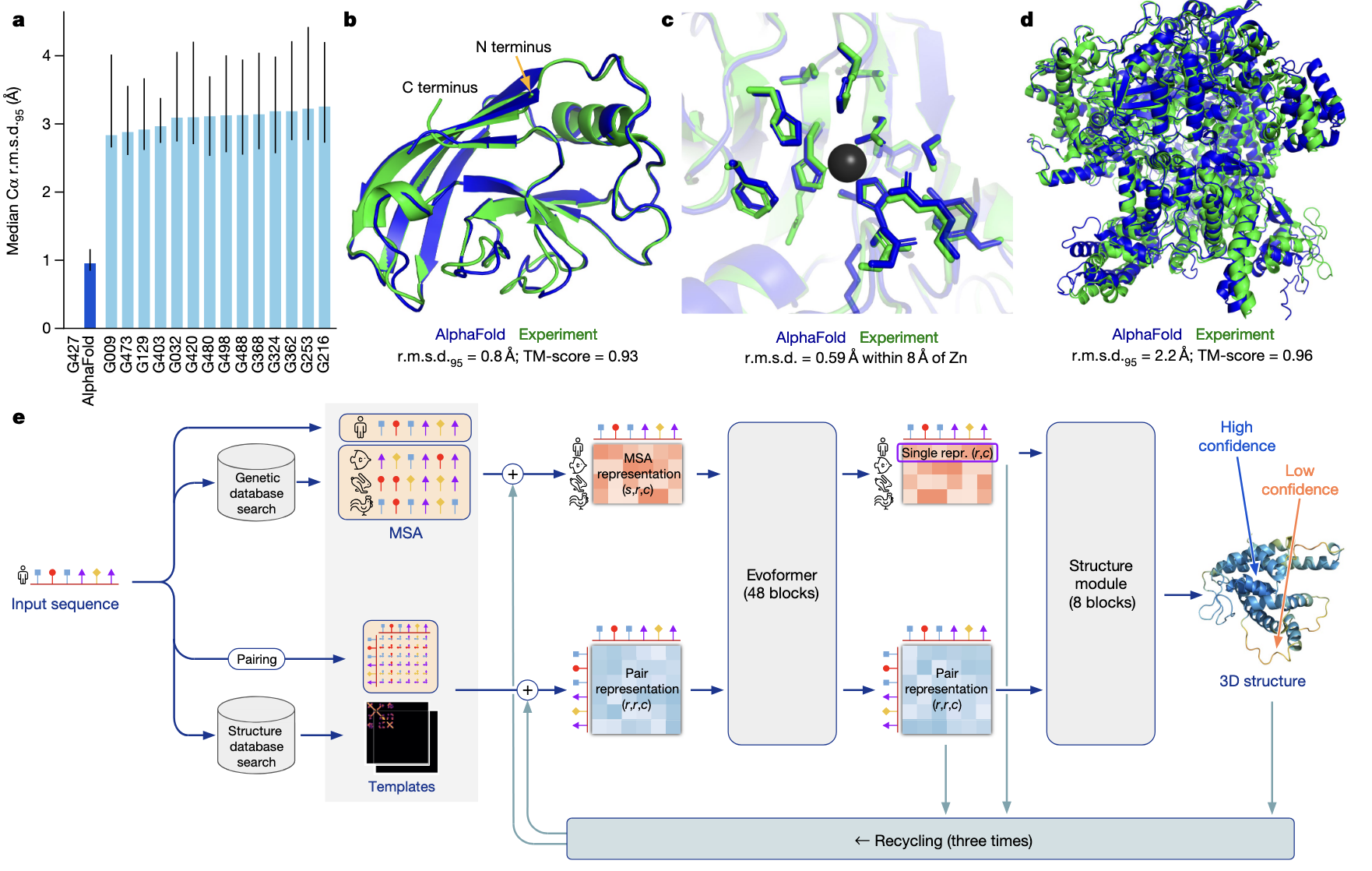

- AlphaFold 2 (2021)

Figure 2: AlphaFold 2 – Advancing Protein Structure Prediction

AlphaFold 2 Paper (2021) by John Jumper, Richard Evans, Alexander Pritzel, et al.

AlphaFold 2, developed by DeepMind, represents a major breakthrough in the field of bioinformatics. By applying deep learning, AlphaFold 2 was able to predict protein structures with unprecedented accuracy, addressing a fundamental challenge in biology that had remained unsolved for decades.

- Stable Diffusion (2022)

Figure 3: Stable Diffusion – Transforming Image Generation

Stable Diffusion Paper (2022) by Robin Rombach, Andreas Blattmann, Dominik Lorenz, et al.

Stable Diffusion is a generative model that uses diffusion processes to create high-quality images from text descriptions. It has opened new avenues in art, design, and media production. The accompanying image was generated from the French prompt “Portrait de style Renaissance d’un astronaute dans l’espace, fond étoilé détaillé, casque réfléchissant” (in English: “Renaissance-style portrait of an astronaut in space, detailed starry background, reflective helmet”).

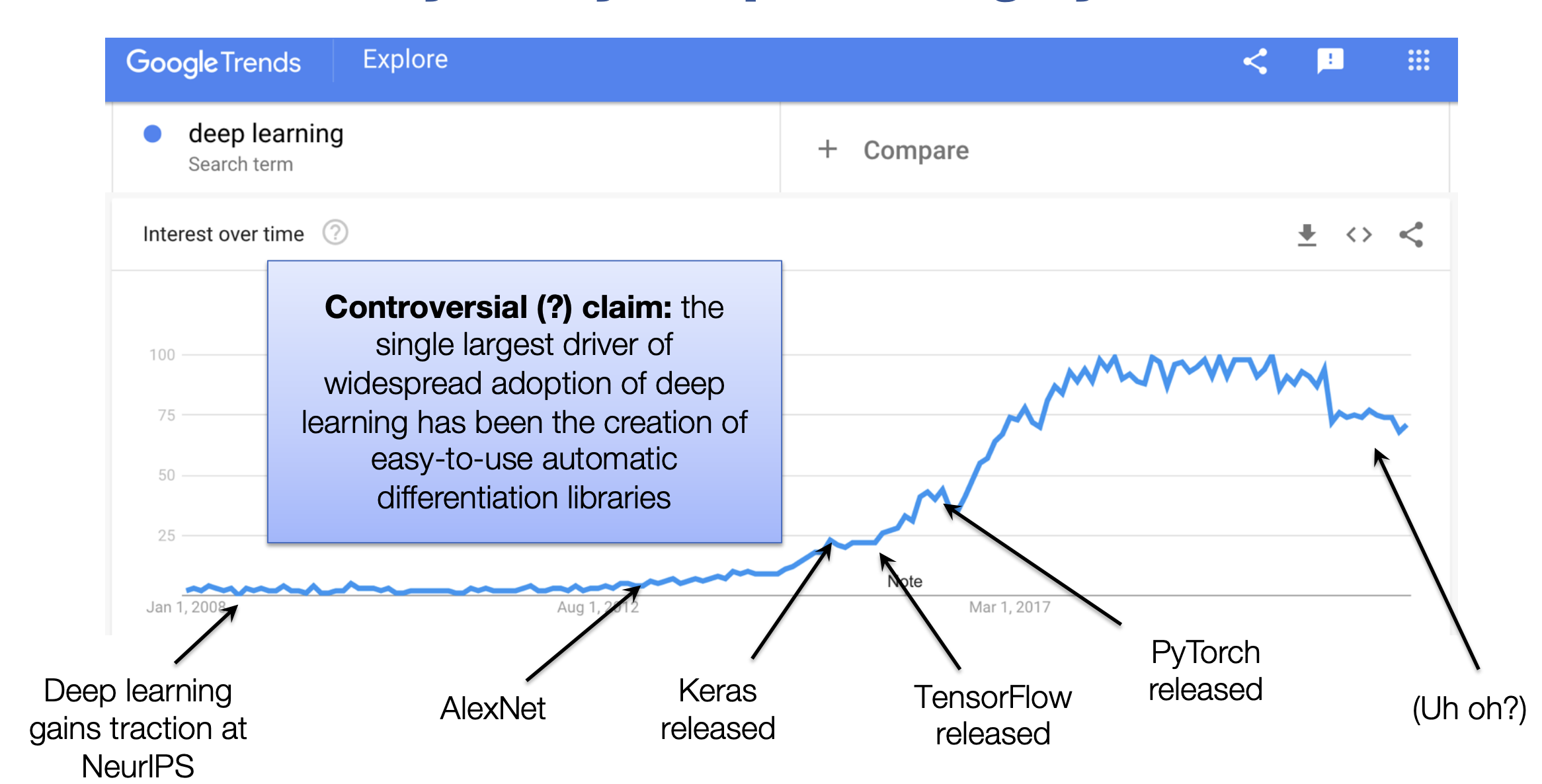

Trends in Deep Learning from 2008 to Present

Deep learning has undergone significant evolution since 2008, marked by key milestones that have shaped the field. The early years saw the resurgence of neural networks with the advent of powerful GPUs, leading to the development of AlexNet in 2012, which demonstrated the potential of deep learning in image recognition. This breakthrough ushered in the modern era of deep learning, followed by the creation of Keras in 2015, which made building and experimenting with neural networks accessible to a broader audience through its user-friendly interface. The release of TensorFlow in the same year by Google provided a robust, scalable platform for deploying deep learning models in production environments. More recently, PyTorch, introduced in 2016, has become a favorite among researchers and developers for its flexibility and ease of use, enabling rapid prototyping and experimentation. These tools and frameworks have been instrumental in advancing deep learning, leading to its widespread adoption across industries and its integration into cutting-edge technologies.

Figure 4: Trends in Deep Learning

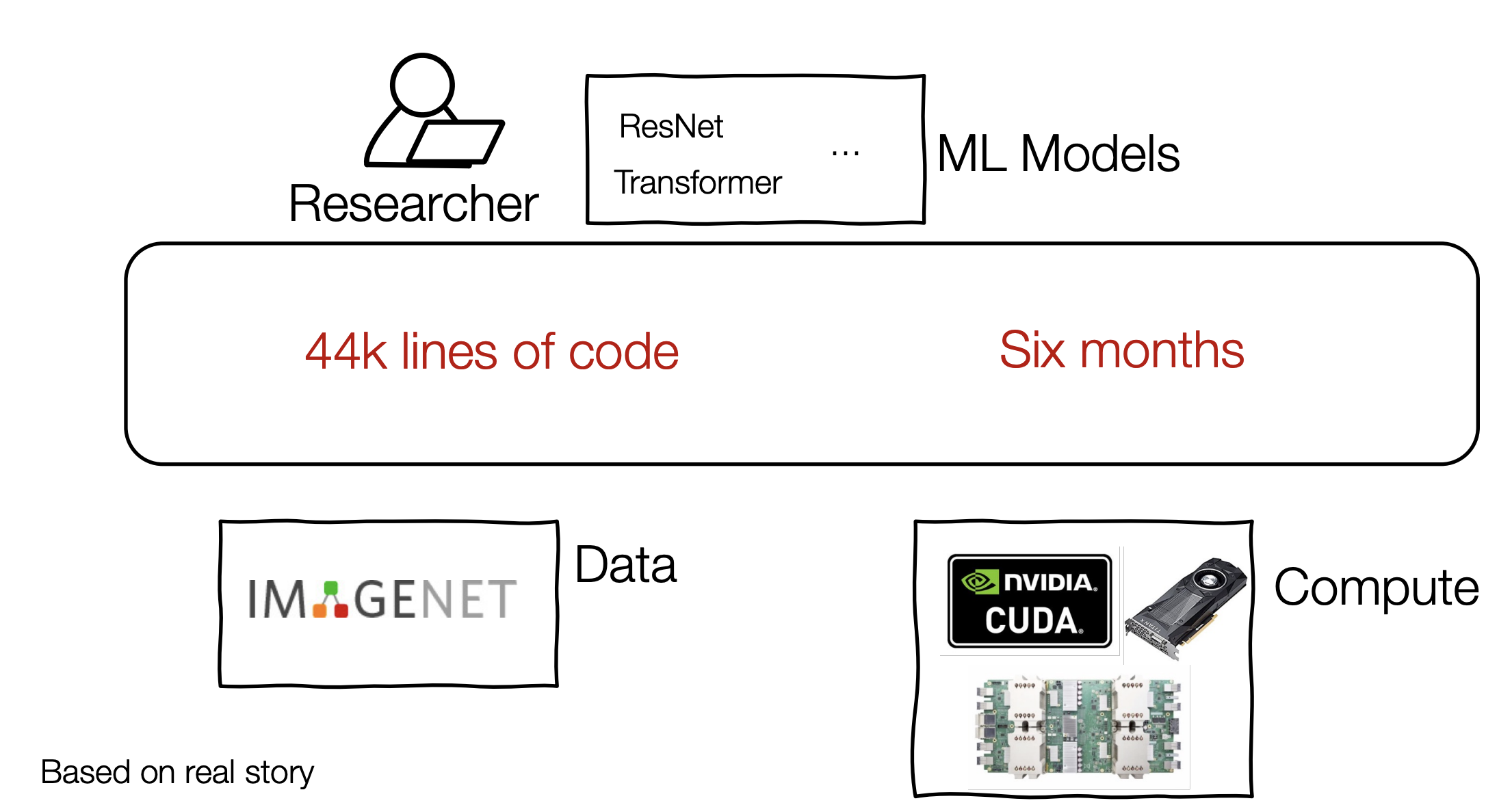

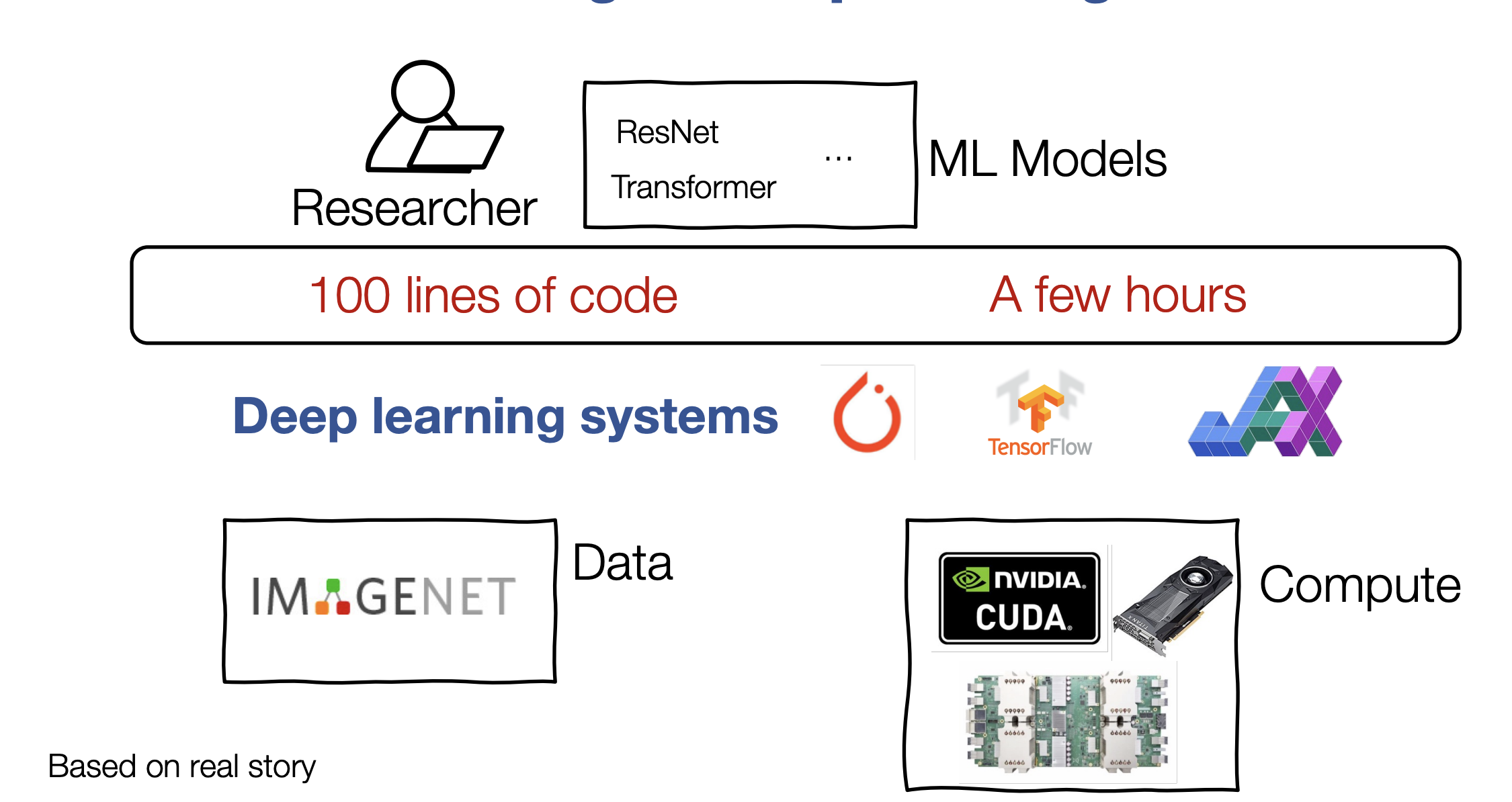

Evolution of Deep Learning Libraries

- In the early days, working on deep learning involved writing a lot of code to define and specify your model, and spending a significant amount of time on training.

- Today, high-level libraries such as PyTorch Lightning automate much of this work.
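To make the contrast concrete, here is a minimal sketch of what hand-written training code can look like when no framework is involved. It fits a one-dimensional linear model with gradients derived by hand. The function name `train_linear_model`, the toy data, and the hyperparameters are all illustrative assumptions rather than course material; modern libraries replace the hand-derived backward pass with automatic differentiation and can also manage the training loop.

```python
# Illustrative sketch only: names, data, and hyperparameters are hypothetical.

def train_linear_model(xs, ys, lr=0.05, epochs=1000):
    """Fit y = w * x + b by gradient descent with hand-derived gradients."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions of the current model.
        preds = [w * x + b for x in xs]
        # Backward pass, derived by hand for the mean squared error loss:
        #   dL/dw = (2/n) * sum((pred - y) * x)
        #   dL/db = (2/n) * sum(pred - y)
        grad_w = (2.0 / n) * sum((p - y) * x for p, y, x in zip(preds, ys, xs))
        grad_b = (2.0 / n) * sum(p - y for p, y in zip(preds, ys))
        # Gradient descent update.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = train_linear_model(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")
```

Even for this tiny model, the gradient formulas must be worked out and kept in sync with the forward pass by hand, which is the kind of effort that does not scale to deep networks.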

Overview of the course:

In this course, we will cover the following topics:

- Machine Learning Refresher

- Backpropagation and Automatic Differentiation

- Neural Networks: Architecture

- Neural Networks: Data and the Loss

- Neural Networks: Learning and Evaluation

- Convolutional Neural Networks

- Classical Model Zoo

- Recurrent Neural Networks